Encountering the “RuntimeError: GPU is required to quantize or run quantize model” error can be frustrating, especially when you’re deep into a machine learning project. It usually means the quantization code could not find a usable GPU, either because none is installed or because the framework cannot access it.

The “RuntimeError: GPU is required to quantize or run quantize model” error indicates that no usable GPU was available for model quantization. To resolve it, make sure you have a compatible GPU, update its drivers, and check that your machine learning framework is configured to use the GPU.

This article explains the error, its signs, and what you need to do to solve it.

What Does RuntimeError Mean?

A “RuntimeError” in programming is an error that occurs while a program is running, rather than during its compilation. It typically results from invalid operations, missing resources, or incompatible hardware and software configurations that disrupt the program’s execution.

What Does The Error “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Mean?

The error “RuntimeError: GPU is required to quantize or run quantize model” means the code could not access a GPU to perform quantization or to run the quantized model. Ensure you have a compatible GPU, update drivers, and configure your machine learning framework to use the GPU properly.

Common Causes Of The Runtime Error:

Inadequate Hardware:

Lack of a compatible GPU or insufficient GPU resources can trigger this runtime error. Quantization processes often require high computational power, and without a capable GPU, the model cannot be executed or quantized effectively.

Misconfigured Environment:

Incorrect software configurations, such as wrong library versions or misaligned paths, can cause the runtime error. Ensuring that all software components and environment variables are correctly set up is crucial for smooth execution.

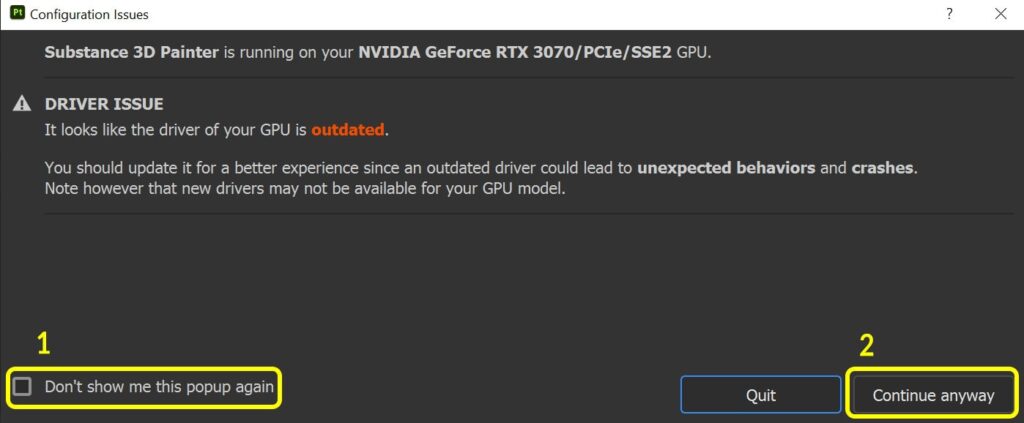

Outdated GPU Drivers:

Using outdated or incompatible GPU drivers can lead to this error. GPU drivers need to be updated regularly to ensure compatibility with the latest software and to handle tasks like quantization effectively.

Incompatible Software Versions:

Mismatched versions of machine learning frameworks and GPU libraries can trigger the error. Ensure that your software versions are compatible with your GPU to avoid such runtime issues and ensure proper functionality.

Lack of GPU Support in Software:

Some software or machine learning frameworks may not support GPU acceleration for certain tasks. Verify that the software you are using supports GPU functionality for quantization or model execution.

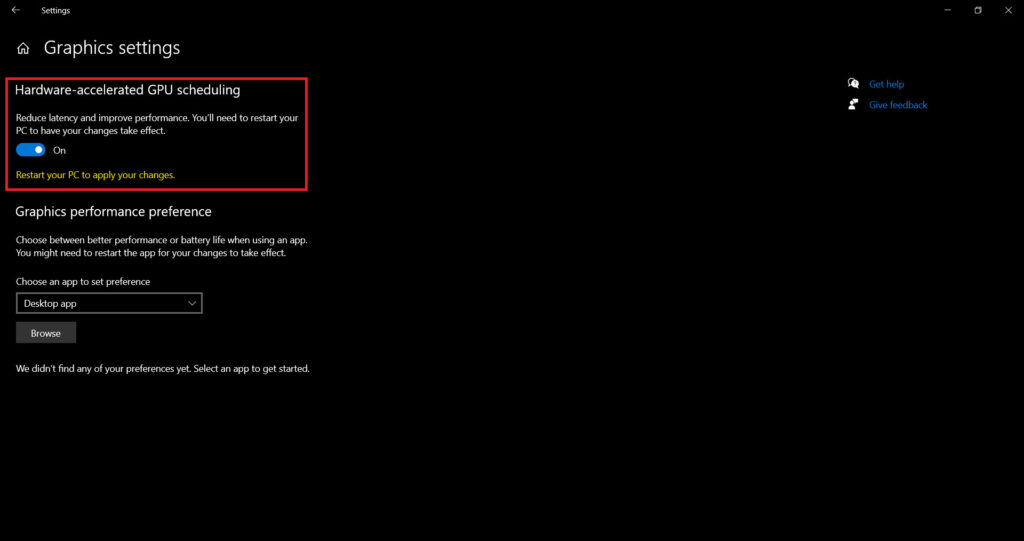

Hardware Acceleration Not Enabled:

If hardware acceleration is disabled in your system settings or software configuration, it can cause this runtime error. Make sure that GPU acceleration is enabled and properly configured in your system settings.

Inadequate System Resources:

Insufficient system memory or processing power can contribute to this error. Ensure your system has enough resources to handle GPU operations and quantization tasks to avoid runtime disruptions.

Misconfigured Model Parameters:

Incorrect model parameters or settings can cause issues during quantization or execution. Verify that your model configuration aligns with the requirements for GPU utilization and quantization processes.

Steps to Resolve the Runtime Error:

Upgrade Your Hardware:

Ensure your system has a compatible GPU with adequate resources. If not, consider upgrading to a GPU that meets the requirements for quantization and model execution.

Update GPU Drivers:

Regularly update your GPU drivers to the latest version. This ensures compatibility with your software and resolves any issues related to outdated or incompatible drivers.

Check Software Compatibility:

Verify that your machine learning framework and libraries support GPU acceleration. Ensure that you are using compatible versions of all software components.

Configure Your Environment:

Correctly configure your software environment to utilize the GPU. Set up environment variables and paths properly to ensure that the GPU is recognized and used for quantization.

Enable Hardware Acceleration:

Make sure hardware acceleration is enabled in both your system settings and software configurations. This allows the GPU to be utilized effectively for quantization tasks.

Optimize System Resources:

Ensure your system has sufficient memory and processing power to handle GPU tasks. Free up resources or upgrade system components if necessary to avoid runtime errors.

Verify Model Parameters:

Double-check that your model parameters and settings are configured correctly for GPU utilization. Misconfigured parameters can cause issues during quantization or execution.

Use Cloud-Based Solutions:

If upgrading hardware isn’t feasible, consider using cloud-based services with GPU support. Platforms like Google Colab or AWS offer GPU resources for model quantization and execution.

Which GPU Drivers Should I Install To Resolve “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.”?

To resolve the “RuntimeError: GPU is required to quantize or run quantize model” error, install the latest GPU drivers compatible with your graphics card. For NVIDIA GPUs, use the NVIDIA GeForce drivers or CUDA drivers.

For AMD GPUs, use the AMD Radeon drivers. Keep the drivers up to date so they stay compatible with your machine learning framework.

How Can I Configure My Environment Variables To Resolve The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model” Error?

To resolve the “RuntimeError: GPU is required to quantize or run quantize model” error, add the GPU library paths to the PATH variable and set CUDA_HOME to your CUDA installation directory. Restart your terminal or system so the changes take effect.
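As a rough way to confirm that these variables are set as intended, the sketch below reads them from the current process. The helper name `check_cuda_env` is made up for this article, and the assumption that the CUDA binaries live in a `bin` folder under CUDA_HOME reflects a typical install rather than a guarantee.

```python
import os
from pathlib import Path


def check_cuda_env(environ=None):
    """Report whether CUDA_HOME is set and whether its bin directory is on PATH.

    `check_cuda_env` is a hypothetical helper, not part of any library.
    """
    env = os.environ if environ is None else environ
    cuda_home = env.get("CUDA_HOME")
    path_entries = [p for p in env.get("PATH", "").split(os.pathsep) if p]

    report = {"cuda_home": cuda_home, "home_exists": False, "bin_on_path": False}
    if cuda_home:
        report["home_exists"] = Path(cuda_home).is_dir()
        # Assumption: the toolkit binaries sit in <CUDA_HOME>/bin.
        cuda_bin = os.path.normpath(os.path.join(cuda_home, "bin"))
        report["bin_on_path"] = any(
            os.path.normpath(p) == cuda_bin for p in path_entries
        )
    return report


if __name__ == "__main__":
    print(check_cuda_env())
```

If `cuda_home` comes back as `None`, or `bin_on_path` is `False`, the variables were probably not exported in the shell that launches your script.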

Future Trends In Quantization And GPUs:

Future trends in quantization and GPUs focus on improving AI efficiency through advanced quantization techniques, enhancing hardware performance, and reducing energy consumption. Innovations include dynamic precision scaling, specialized hardware accelerators, and integration with emerging AI models for faster, more efficient computing.

How Can I Quantize A Model For CPU?

Use PyTorch or TensorFlow quantization tools to convert a model to a lower precision format like INT8. Specify the target device as CPU during the quantization process.
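One possible way to do this in PyTorch is post-training dynamic quantization, sketched below. It assumes a PyTorch version that provides `torch.ao.quantization.quantize_dynamic` and a build with a CPU quantization backend. The function name `quantize_linear_layers_for_cpu` and the toy model are illustrative only, and this is one technique among several rather than the required one.

```python
try:
    import torch
except ImportError:  # PyTorch not installed; the sketch stays importable
    torch = None


def quantize_linear_layers_for_cpu(model):
    """Dynamically quantize Linear layers to INT8 weights, keeping the model on CPU."""
    return torch.ao.quantization.quantize_dynamic(
        model.cpu().eval(),   # the target device is the CPU
        {torch.nn.Linear},    # layer types to quantize
        dtype=torch.qint8,    # store weights as 8-bit integers
    )


if __name__ == "__main__" and torch is not None:
    # Toy model used only for illustration.
    toy = torch.nn.Sequential(
        torch.nn.Linear(8, 4), torch.nn.ReLU(), torch.nn.Linear(4, 2)
    )
    quantized = quantize_linear_layers_for_cpu(toy)
    print(quantized(torch.randn(1, 8)).shape)
```

Dynamic quantization needs no calibration data, which makes it a convenient first experiment on a machine without a GPU.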

Can You Run A Quantized Model On GPU?

Yes, you can run a quantized model on a GPU. Quantized models can be executed on GPUs that support lower precision arithmetic, such as INT8, for improved performance and efficiency.

Can You Run A 65B Quantized Model On CPU?

Running a 65 billion parameter quantized model on a CPU is challenging due to limited memory and computational power. It’s generally recommended to use a GPU for such large models to achieve acceptable performance.
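To put the memory constraint in numbers, the short calculation below estimates the space needed for the weights alone at different precisions. It deliberately ignores activations, caches, and runtime overhead, so real requirements are higher. The helper `weight_memory_gb` is a name chosen for this example.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 65e9  # 65 billion parameters
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(params, bits):.1f} GB")
```

Even at 4 bits per weight the model needs roughly 32.5 GB for its weights, which is more RAM than many desktop machines have.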

What Does It Mean To Quantize A Model?

Quantizing a model involves reducing the precision of its weights and activations, typically from floating-point (FP32) to a lower precision format like INT8, to reduce memory usage and improve inference speed.
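The idea can be illustrated without any framework. The sketch below maps a list of floats to signed 8-bit integers using one scale and one zero point, then maps them back. It is a simplified teaching example with made-up function names, not how any particular library implements quantization.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers with a single scale and zero point."""
    lo, hi = min(values), max(values)
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # make sure 0.0 is exactly representable
    scale = (hi - lo) / 255 or 1.0         # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)  # integer that represents 0.0
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q, scale, zero_point):
    """Recover approximate floats from the 8-bit integers."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.0, 0.0, 0.5, 2.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(q, scale, zero_point)
print(q, restored)
```

Each restored value differs from the original by at most about half of `scale`, which is the precision given up in exchange for storing one byte per weight instead of four.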

How To Quantize A Pretrained Model?

To quantize a pretrained model, use frameworks like PyTorch or TensorFlow that provide quantization APIs. Apply techniques such as post-training quantization or quantization-aware training to convert the model to a lower precision.

What Does CUDA_VISIBLE_DEVICES Do?

CUDA_VISIBLE_DEVICES is an environment variable that controls which GPUs are visible to CUDA applications. It allows users to specify which GPUs to use for a particular computation.
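A small sketch of how the variable is typically used from Python follows. The value `"0"` is only an example, and `visible_gpu_ids` is a hypothetical helper written for this article. Note that an empty value hides every GPU from the process, which is one way to trigger the error by accident.

```python
import os

# Expose only the first physical GPU to this process. This must run before
# the framework initializes CUDA, so it belongs at the very top of the script.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"


def visible_gpu_ids(environ=None):
    """Return the device IDs listed in CUDA_VISIBLE_DEVICES, or None if unset."""
    env = os.environ if environ is None else environ
    raw = env.get("CUDA_VISIBLE_DEVICES")
    if raw is None:
        return None  # unset: every GPU is visible
    # An empty string yields an empty list: no GPU is visible.
    return [part.strip() for part in raw.split(",") if part.strip()]


print(visible_gpu_ids())
```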

Can Outdated GPU Drivers Cause The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

Yes, outdated GPU drivers can cause this error. Ensure your GPU drivers are up to date and compatible with the CUDA version required by your machine learning framework.

Why Do Some Functions In PyTorch Require A GPU?

Some functions in PyTorch require a GPU to leverage the parallel processing capabilities and speed of GPUs, particularly for tasks involving large-scale matrix computations and deep learning operations.

Can The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model” Error Occur In TensorFlow?

TensorFlow workflows can fail in a similar way when you attempt to quantize or run a quantized model on a system without a properly configured or accessible GPU, although the exact wording of the message may differ.

How To Load A Quantized Model?

Load a quantized model using the appropriate framework’s API, such as torch.load in PyTorch or tf.saved_model.load in TensorFlow. Ensure the environment supports the quantized operations for correct execution.

Does Quantization Work On CPU?

Yes, quantization works on CPUs. Frameworks like PyTorch and TensorFlow provide support for quantized operations on CPUs, allowing models to run efficiently on devices without GPUs.

What Is The Purpose Of Quantization?

The purpose of quantization is to reduce the computational complexity and memory footprint of a model by lowering the precision of its parameters and operations, thereby improving inference speed and efficiency.

What Are The Disadvantages Of Quantization?

Disadvantages of quantization include potential loss of model accuracy due to reduced precision, increased quantization error, and compatibility issues with certain hardware or operations not supporting lower precision arithmetic.

Does Quantization Improve Speed?

Yes, quantization typically improves inference speed by reducing the computational load and memory bandwidth requirements. Lower precision arithmetic operations are faster to execute, leading to quicker model predictions.

How Do You Reduce Quantization Error?

Reduce quantization error by using techniques like quantization-aware training, which adjusts the model during training to compensate for the lower precision, and by carefully choosing the quantization scheme and calibration method.

Does Model Quantization Affect Performance?

Yes, model quantization affects performance. While it improves speed and reduces memory usage, it can also lead to a drop in accuracy. Balancing these trade-offs is key to effective quantization.

FAQs:

1. What Is The Problem Of Quantization?

The main problem of quantization is the potential degradation of model accuracy due to the reduced precision of weights and activations. This can result in significant performance loss in some cases.

2. How Do I Set Up My Environment Variables To Fix The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

Set the CUDA_VISIBLE_DEVICES environment variable to specify the GPU to use. Ensure that your environment is correctly configured with the necessary GPU drivers and libraries to support quantization.

3. What Is Quantization In Machine Learning?

Quantization in machine learning is the process of reducing the precision of a model’s weights and activations, typically converting from 32-bit floating-point to lower precision formats like 8-bit integer, to optimize performance.

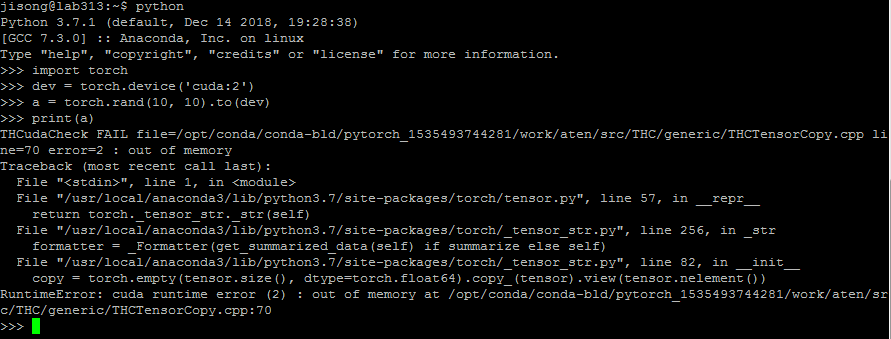

4. What Code Changes Are Needed To Use A GPU In PyTorch?

To use a GPU in PyTorch, move tensors and models to the GPU using .to('cuda') or .cuda(). Check that CUDA is available first, and select the device accordingly in your code.
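A minimal sketch of that pattern is shown here. It guards the import so the snippet still loads on machines without PyTorch. The function names `pick_device` and `run_tiny_model` and the tiny `Linear` model are illustrative choices, not part of PyTorch.

```python
try:
    import torch
except ImportError:  # PyTorch not installed; keep the sketch importable
    torch = None


def pick_device():
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


def run_tiny_model():
    """Move a small model and its input to the selected device and run it."""
    if torch is None:
        return None
    device = torch.device(pick_device())
    model = torch.nn.Linear(4, 2).to(device)    # parameters now live on `device`
    inputs = torch.randn(3, 4, device=device)   # input created on the same device
    with torch.no_grad():
        return model(inputs)


print("Selected device:", pick_device())
```

Keeping the model and its inputs on the same device avoids a separate class of device-mismatch errors.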

5. Can I Use Cloud Services To Resolve “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.”?

Yes, cloud services like AWS, Google Cloud, and Azure offer GPU instances that can be used to resolve the error by providing the necessary hardware for quantization and running quantized models.

6. When Do You Get The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

This error occurs when attempting to quantize or run a quantized model on a system without a GPU, or when the GPU is not properly configured or accessible by the machine learning framework.

7. What Should I Do If My System Doesn’t Have A GPU?

If your system lacks a GPU, consider using cloud-based GPU instances, or optimize your model for CPU execution by using CPU-specific quantization techniques and efficient computational libraries.
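If your code sometimes runs on GPU machines and sometimes not, one option is to catch this specific error and switch to a CPU path. The sketch below shows the control flow only. `quantize_with_fallback` and the two stand-in functions are hypothetical, and the stand-in raises the error itself purely to simulate a machine without a GPU.

```python
def quantize_with_fallback(quantize_on_gpu, quantize_on_cpu):
    """Try the GPU path first; use the CPU path only for the 'GPU is required' error."""
    try:
        return quantize_on_gpu()
    except RuntimeError as err:
        if "GPU is required" not in str(err):
            raise  # unrelated runtime errors should still surface
        return quantize_on_cpu()


def fake_gpu_quantize():
    # Stand-in that simulates a GPU-only quantization routine on a CPU-only machine.
    raise RuntimeError("GPU is required to quantize or run quantize model.")


def fake_cpu_quantize():
    # Stand-in for a CPU-specific quantization routine.
    return "quantized on CPU"


print(quantize_with_fallback(fake_gpu_quantize, fake_cpu_quantize))
```

Matching on the message text is brittle, so treat this as a stopgap rather than a permanent design.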

8. How Do I Move A TensorFlow Model To A GPU?

To move a TensorFlow model to a GPU, ensure TensorFlow is installed with GPU support, then build and run your model inside a with tf.device('/GPU:0'): context manager so that its operations are placed on the GPU.

9. What Version Of PyTorch Should I Install To Avoid The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

If you intend to use the GPU, install a CUDA-enabled build of PyTorch that matches your driver, because a CPU-only build reports no GPU even when one is installed. If you plan to quantize on the CPU instead, install the latest stable release and make sure it includes the CPU quantization libraries and dependencies you need.

10. Is There A Way To Test If My GPU Setup Is Correct?

Test your GPU setup by running a simple PyTorch or TensorFlow script that performs tensor operations on the GPU. Check for successful execution without errors and monitor GPU utilization.
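A possible version of such a script is sketched below. It checks two levels separately: whether the NVIDIA driver lists a GPU (through `nvidia-smi -L`) and whether PyTorch can run a small operation on it. It assumes an NVIDIA GPU, and the function names are chosen for this example.

```python
import shutil
import subprocess


def driver_sees_gpu():
    """Return True if nvidia-smi is installed and lists at least one GPU."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return False
    try:
        result = subprocess.run(
            [exe, "-L"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


def framework_sees_gpu():
    """Return True if PyTorch can run a small matrix multiply on the GPU."""
    try:
        import torch
    except ImportError:
        return False
    if not torch.cuda.is_available():
        return False
    x = torch.ones(2, 2, device="cuda")
    return bool((x @ x).sum().item() == 8.0)  # each of the 4 entries equals 2


print("Driver sees a GPU:   ", driver_sees_gpu())
print("Framework sees a GPU:", framework_sees_gpu())
```

If the driver check passes but the framework check fails, the likely suspects are a CPU-only framework build, a CUDA version mismatch, or a CUDA_VISIBLE_DEVICES value that hides the device.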

Wrap Up:

The error “RuntimeError: GPU is required to quantize or run quantize model” indicates that the system lacks an accessible GPU needed to quantize a model or run a quantized one. It usually arises when these tasks are attempted on a CPU-only setup or in an improperly configured GPU environment. Resolving it involves making a GPU available to the framework, updating drivers, or using cloud-based GPU services to perform the required operations.